Tasks

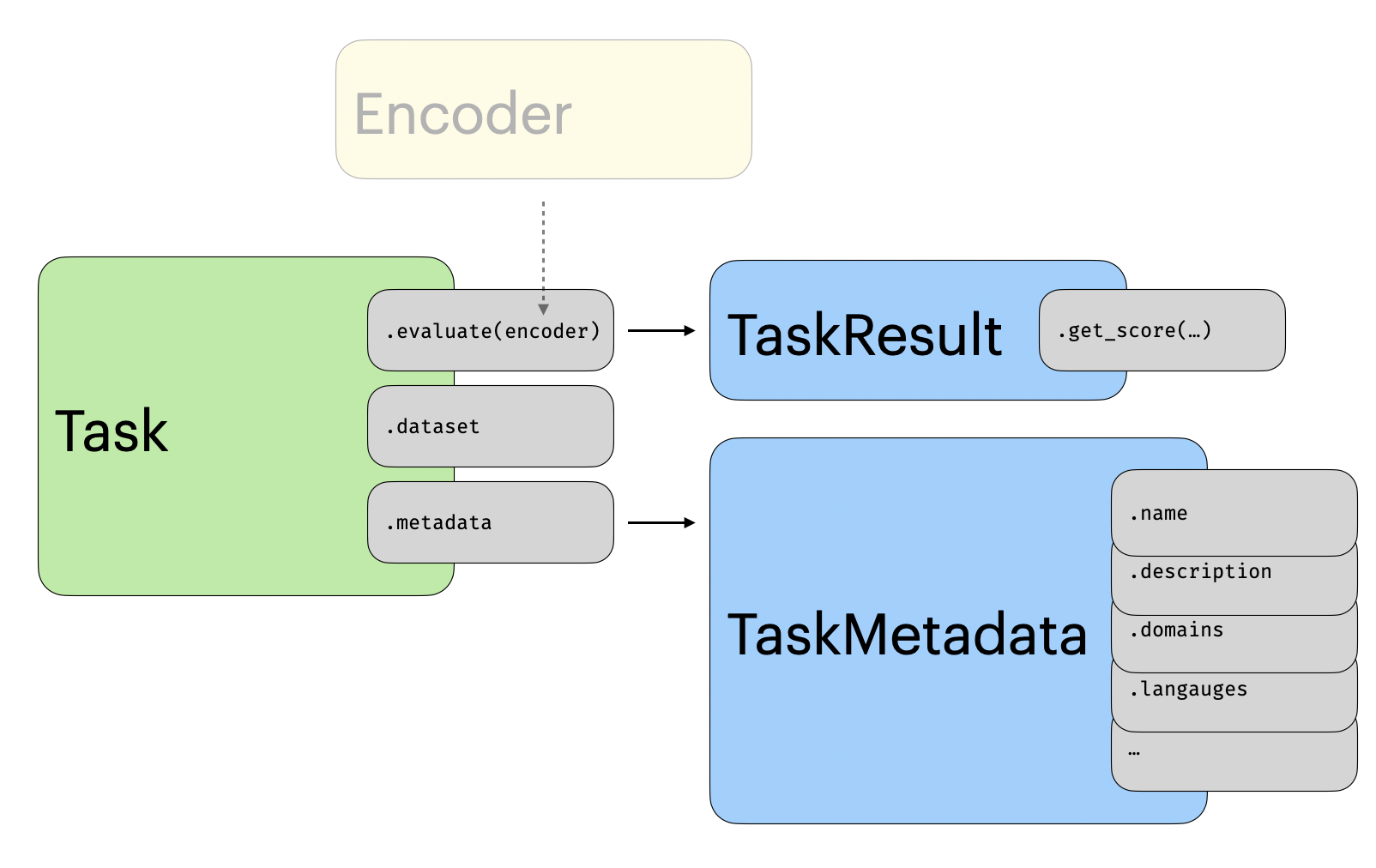

A task is an implementation of a dataset for evaluation. It could, for instance, be the MIRACL dataset, consisting of queries, a corpus of documents, and the correct documents to retrieve for a given query. In addition to the dataset, a task includes the specification of how a model should be run on the dataset and how its output should be evaluated. Each task also comes with extensive metadata, including the license, who annotated the data, etc.

Utilities

mteb.get_tasks


This module contains functions used to get an overview of the MTEB benchmark.

MTEBTasks


Bases: tuple[AbsTask]

A tuple of tasks with additional methods to get an overview of the tasks.

Source code in mteb/get_tasks.py


languages

property


Return all languages from tasks

count_languages()


Summarize the counts of all languages across the tasks.

Returns:

| Type | Description |
|---|---|
| Counter | Counter with language as key and count as value. |

Source code in mteb/get_tasks.py
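A minimal sketch of the kind of summary count_languages() returns, using collections.Counter over a few task records. TaskRecord and the task names are hypothetical stand-ins rather than mteb objects, and counting each language at most once per task is an assumption of this sketch.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TaskRecord:
    """Hypothetical stand-in for a task: only the fields this sketch needs."""

    name: str
    languages: list[str] = field(default_factory=list)


def count_languages(tasks: list[TaskRecord]) -> Counter:
    """Count how many tasks each language appears in."""
    counts: Counter = Counter()
    for task in tasks:
        # set() ensures a language listed twice in one task is counted once
        counts.update(set(task.languages))
    return counts


tasks = [
    TaskRecord("TaskA", ["eng", "deu"]),
    TaskRecord("TaskB", ["eng"]),
    TaskRecord("TaskC", ["dan", "eng", "eng"]),
]
language_counts = count_languages(tasks)
print(language_counts["eng"], language_counts["deu"], language_counts["dan"])  # 3 1 1
```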


to_dataframe(properties=_DEFAULT_PROPRIETIES)


Generate a pandas DataFrame summarizing the tasks.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| properties | Sequence[str] | List of metadata to summarize from a Task class. | _DEFAULT_PROPRIETIES |

Returns:

| Type | Description |
|---|---|
| DataFrame | pandas DataFrame. |

Source code in mteb/get_tasks.py


to_latex(properties=_DEFAULT_PROPRIETIES, group_indices=('type', 'name'), include_citation_in_name=True, limit_n_entries=3)


Generate a LaTeX table of the tasks.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| properties | Sequence[str] | List of metadata to summarize from a Task class. | _DEFAULT_PROPRIETIES |
| group_indices | Sequence[str] \| None | List of properties to group the table by. | ('type', 'name') |
| include_citation_in_name | bool | Whether to include the citation in the name. | True |
| limit_n_entries | int \| None | Limit the number of entries for cell values, e.g. number of languages and domains. Will use "..." to indicate that there are more entries. | 3 |

Returns:

| Type | Description |
|---|---|
| str | String with a LaTeX table. |

Source code in mteb/get_tasks.py


to_markdown(properties=_DEFAULT_PROPRIETIES, limit_n_entries=3)


Generate a markdown table summarizing the tasks.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| properties | Sequence[str] | List of metadata to summarize from a Task class. | _DEFAULT_PROPRIETIES |
| limit_n_entries | int \| None | Limit the number of entries for cell values, e.g. number of languages and domains. Will use "..." to indicate that there are more entries. | 3 |

Returns:

| Type | Description |
|---|---|
| str | String with a markdown table. |

Source code in mteb/get_tasks.py
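The limit_n_entries behaviour shared by to_markdown() and to_latex() can be sketched with a small helper that turns task rows into a markdown table. rows_to_markdown, format_cell and the ExampleSTS row are hypothetical; sorting list values before truncating is an assumption, and mteb's own column formatting may differ.

```python
from __future__ import annotations

from collections.abc import Sequence


def format_cell(value: object, limit_n_entries: int | None) -> str:
    """Render one cell; list-like values are sorted and truncated with '...'."""
    if isinstance(value, (list, tuple, set)):
        items = sorted(str(v) for v in value)
        if limit_n_entries is not None and len(items) > limit_n_entries:
            items = items[:limit_n_entries] + ["..."]
        return ", ".join(items)
    return str(value)


def rows_to_markdown(
    rows: Sequence[dict], properties: Sequence[str], limit_n_entries: int | None = 3
) -> str:
    """Build a markdown table with one column per property and one row per task."""
    header = "| " + " | ".join(properties) + " |"
    separator = "|" + "---|" * len(properties)
    body = [
        "| " + " | ".join(format_cell(row.get(p, ""), limit_n_entries) for p in properties) + " |"
        for row in rows
    ]
    return "\n".join([header, separator, *body])


rows = [{"name": "ExampleSTS", "type": "STS", "languages": ["eng", "deu", "fra", "ara", "cmn"]}]
print(rows_to_markdown(rows, ["name", "type", "languages"]))
```

With the default limit of 3, the five languages are rendered as "ara, cmn, deu, ...".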


get_task(task_name, languages=None, script=None, eval_splits=None, hf_subsets=None, exclusive_language_filter=False)


Get a task by name.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| task_name | str | The name of the task to fetch. | required |
| languages | Sequence[str] \| None | A list of languages, specified either as three-letter language codes (ISO 639-3, e.g. "eng") or as language-script codes (e.g. "eng-Latn"). For multilingual tasks this will also remove languages that are not in the specified list. | None |
| script | Sequence[str] \| None | A list of script codes (ISO 15924 codes, e.g. "Latn"). If None, all scripts are included. For multilingual tasks this will also remove scripts that are not in the specified list. | None |
| eval_splits | Sequence[str] \| None | A list of evaluation splits to include. If None, all splits are included. | None |
| hf_subsets | Sequence[str] \| None | A list of HuggingFace subsets to evaluate on. | None |
| exclusive_language_filter | bool | Some datasets contain more than one language, e.g. the STS22 subset "de-en" contains eng and deu. If exclusive_language_filter is False, such a subset is kept if any of its languages is specified; if True, it is kept only if all of its languages are specified. | False |

Returns:

| Type | Description |
|---|---|
| AbsTask | An initialized task object. |

Examples:

>>> get_task("BornholmBitextMining")

Source code in mteb/get_tasks.py
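To make the languages and exclusive_language_filter parameters concrete, the sketch below filters the subsets of an STS22-like task, mirroring the "de-en" case in the parameter description. The subset-to-language mapping and the helpers lang_matches and filter_subsets are hypothetical; they model the described behaviour, not mteb's implementation.

```python
from __future__ import annotations


def lang_matches(requested: str, langscript: str) -> bool:
    """'eng' matches English in any script; 'eng-Latn' must match exactly."""
    if "-" in requested:
        return requested == langscript
    return langscript.split("-")[0] == requested


def filter_subsets(
    hf_subsets_to_langscripts: dict[str, list[str]],
    languages: list[str],
    exclusive_language_filter: bool = False,
) -> list[str]:
    kept = []
    for subset, langscripts in hf_subsets_to_langscripts.items():
        hits = [any(lang_matches(req, ls) for req in languages) for ls in langscripts]
        # exclusive: every language of the subset must be requested;
        # non-exclusive: one requested language is enough
        keep = all(hits) if exclusive_language_filter else any(hits)
        if keep:
            kept.append(subset)
    return kept


subsets = {"de": ["deu-Latn"], "en": ["eng-Latn"], "de-en": ["deu-Latn", "eng-Latn"]}
print(filter_subsets(subsets, ["eng"]))  # ['en', 'de-en']
print(filter_subsets(subsets, ["eng"], exclusive_language_filter=True))  # ['en']
```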


get_tasks(tasks=None, *, languages=None, script=None, domains=None, task_types=None, categories=None, exclude_superseded=True, eval_splits=None, exclusive_language_filter=False, modalities=None, exclusive_modality_filter=False, exclude_aggregate=False, exclude_private=True)


Get a list of tasks based on the specified filters.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| tasks | Sequence[str] \| None | A list of task names to include. If None, all tasks which pass the filters are included. If passed, other filters are ignored. | None |
| languages | Sequence[str] \| None | A list of languages, specified either as three-letter language codes (ISO 639-3, e.g. "eng") or as language-script codes (e.g. "eng-Latn"). For multilingual tasks this will also remove languages that are not in the specified list. | None |
| script | Sequence[str] \| None | A list of script codes (ISO 15924 codes, e.g. "Latn"). If None, all scripts are included. For multilingual tasks this will also remove scripts that are not in the specified list. | None |
| domains | Sequence[TaskDomain] \| None | A list of task domains, e.g. "Legal", "Medical", "Fiction". | None |
| task_types | Sequence[TaskType] \| None | A list of task types, e.g. "Classification" or "Retrieval". If None, all tasks are included. | None |
| categories | Sequence[TaskCategory] \| None | A list of task categories, e.g. "t2t" (text to text) or "t2i" (text to image). See TaskMetadata for the full list. | None |
| exclude_superseded | bool | A boolean flag to exclude datasets which are superseded by another. | True |
| eval_splits | Sequence[str] \| None | A list of evaluation splits to include. If None, all splits are included. | None |
| exclusive_language_filter | bool | Some datasets contain more than one language, e.g. the STS22 subset "de-en" contains eng and deu. If exclusive_language_filter is False, such a subset is kept if any of its languages is specified; if True, it is kept only if all of its languages are specified. | False |
| modalities | Sequence[Modalities] \| None | A list of modalities to include. If None, all modalities are included. | None |
| exclusive_modality_filter | bool | If True, only keep tasks whose modalities exactly match the filter modalities (every filter modality is a task modality and every task modality is a filter modality). If False, keep tasks if any of the task's modalities match the filter modalities. | False |
| exclude_aggregate | bool | If True, exclude aggregate tasks. If False, both aggregate and non-aggregate tasks are returned. | False |
| exclude_private | bool | If True (default), exclude private/closed datasets (is_public=False). If False, include both public and private datasets. | True |

Returns:

| Type | Description |
|---|---|
| MTEBTasks | A list of all initialized task objects which pass all of the filters (AND operation). |

Examples:

>>> get_tasks(languages=["eng", "deu"], script=["Latn"], domains=["Legal"])

>>> get_tasks(languages=["eng"], script=["Latn"], task_types=["Classification"])

>>> get_tasks(languages=["eng"], script=["Latn"], task_types=["Clustering"], exclude_superseded=False)

>>> get_tasks(languages=["eng"], tasks=["WikipediaRetrievalMultilingual"], eval_splits=["test"])

>>> get_tasks(tasks=["STS22"], languages=["eng"], exclusive_language_filter=True) # don't include multilingual subsets containing English

Source code in mteb/get_tasks.py
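The AND semantics of get_tasks() can be illustrated without mteb installed. TaskInfo, select_tasks and the catalog entries below are hypothetical; the real function additionally handles scripts, categories, modalities, evaluation splits and subset-level language filtering, and returns initialized task objects rather than names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TaskInfo:
    """Hypothetical, trimmed-down stand-in for a task's metadata."""

    name: str
    type: str
    languages: list[str]
    domains: list[str] = field(default_factory=list)
    superseded_by: str | None = None
    is_public: bool = True


def select_tasks(
    tasks: list[TaskInfo],
    *,
    languages: list[str] | None = None,
    domains: list[str] | None = None,
    task_types: list[str] | None = None,
    exclude_superseded: bool = True,
    exclude_private: bool = True,
) -> list[str]:
    """Return the names of tasks passing every active filter (logical AND)."""
    selected = []
    for t in tasks:
        if languages is not None and not set(languages) & set(t.languages):
            continue
        if domains is not None and not set(domains) & set(t.domains):
            continue
        if task_types is not None and t.type not in task_types:
            continue
        if exclude_superseded and t.superseded_by is not None:
            continue
        if exclude_private and not t.is_public:
            continue
        selected.append(t.name)
    return selected


catalog = [
    TaskInfo("LegalClsEN", "Classification", ["eng"], ["Legal"]),
    TaskInfo("OldLegalClsEN", "Classification", ["eng"], ["Legal"], superseded_by="LegalClsEN"),
    TaskInfo("LegalClsDE", "Classification", ["deu"], ["Legal"]),
    TaskInfo("PrivateClsEN", "Classification", ["eng"], is_public=False),
    TaskInfo("NewsClusteringEN", "Clustering", ["eng"], ["News"]),
]
print(select_tasks(catalog, languages=["eng"], task_types=["Classification"]))  # ['LegalClsEN']
```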


mteb.get_task(task_name, languages=None, script=None, eval_splits=None, hf_subsets=None, exclusive_language_filter=False)


Get a task by name.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| task_name | str | The name of the task to fetch. | required |
| languages | Sequence[str] \| None | A list of languages, specified either as three-letter language codes (ISO 639-3, e.g. "eng") or as language-script codes (e.g. "eng-Latn"). For multilingual tasks this will also remove languages that are not in the specified list. | None |
| script | Sequence[str] \| None | A list of script codes (ISO 15924 codes, e.g. "Latn"). If None, all scripts are included. For multilingual tasks this will also remove scripts that are not in the specified list. | None |
| eval_splits | Sequence[str] \| None | A list of evaluation splits to include. If None, all splits are included. | None |
| hf_subsets | Sequence[str] \| None | A list of HuggingFace subsets to evaluate on. | None |
| exclusive_language_filter | bool | Some datasets contain more than one language, e.g. the STS22 subset "de-en" contains eng and deu. If exclusive_language_filter is False, such a subset is kept if any of its languages is specified; if True, it is kept only if all of its languages are specified. | False |

Returns:

| Type | Description |
|---|---|
| AbsTask | An initialized task object. |

Examples:

>>> get_task("BornholmBitextMining")

Source code in mteb/get_tasks.py


mteb.filter_tasks


This module contains functions used to get an overview of the MTEB benchmark.

filter_tasks(tasks, *, languages=None, script=None, domains=None, task_types=None, categories=None, modalities=None, exclusive_modality_filter=False, exclude_superseded=False, exclude_aggregate=False, exclude_private=False)


filter_tasks(
    tasks: Iterable[AbsTask],
    *,
    languages: Sequence[str] | None = None,
    script: Sequence[str] | None = None,
    domains: Iterable[TaskDomain] | None = None,
    task_types: Iterable[TaskType] | None = None,
    categories: Iterable[TaskCategory] | None = None,
    modalities: Iterable[Modalities] | None = None,
    exclusive_modality_filter: bool = False,
    exclude_superseded: bool = False,
    exclude_aggregate: bool = False,
    exclude_private: bool = False,
) -> list[AbsTask]

filter_tasks(
    tasks: Iterable[type[AbsTask]],
    *,
    languages: Sequence[str] | None = None,
    script: Sequence[str] | None = None,
    domains: Iterable[TaskDomain] | None = None,
    task_types: Iterable[TaskType] | None = None,
    categories: Iterable[TaskCategory] | None = None,
    modalities: Iterable[Modalities] | None = None,
    exclusive_modality_filter: bool = False,
    exclude_superseded: bool = False,
    exclude_aggregate: bool = False,
    exclude_private: bool = False,
) -> list[type[AbsTask]]

Filter tasks based on the specified criteria.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| tasks | Iterable[AbsTask] \| Iterable[type[AbsTask]] | The tasks to filter, given either as task instances or as task classes. | required |
| languages | Sequence[str] \| None | A list of languages, specified either as three-letter language codes (ISO 639-3, e.g. "eng") or as language-script codes (e.g. "eng-Latn"). For multilingual tasks this will also remove languages that are not in the specified list. | None |
| script | Sequence[str] \| None | A list of script codes (ISO 15924 codes, e.g. "Latn"). If None, all scripts are included. For multilingual tasks this will also remove scripts that are not in the specified list. | None |
| domains | Iterable[TaskDomain] \| None | A list of task domains, e.g. "Legal", "Medical", "Fiction". | None |
| task_types | Iterable[TaskType] \| None | A list of task types, e.g. "Classification" or "Retrieval". If None, all tasks are included. | None |
| categories | Iterable[TaskCategory] \| None | A list of task categories, e.g. "t2t" (text to text) or "t2i" (text to image). See TaskMetadata for the full list. | None |
| exclude_superseded | bool | A boolean flag to exclude datasets which are superseded by another. | False |
| modalities | Iterable[Modalities] \| None | A list of modalities to include. If None, all modalities are included. | None |
| exclusive_modality_filter | bool | If True, only keep tasks whose modalities exactly match the filter modalities (every filter modality is a task modality and every task modality is a filter modality). If False, keep tasks if any of the task's modalities match the filter modalities. | False |
| exclude_aggregate | bool | If True, exclude aggregate tasks. If False, both aggregate and non-aggregate tasks are returned. | False |
| exclude_private | bool | If True, exclude private/closed datasets (is_public=False). If False (default), include both public and private datasets. | False |

Returns:

| Type | Description |
|---|---|
| list[AbsTask] \| list[type[AbsTask]] | A list of task objects which pass all of the filters. |

Examples:

>>> text_classification_tasks = filter_tasks(my_tasks, task_types=["Classification"], modalities=["text"])

>>> medical_tasks = filter_tasks(my_tasks, domains=["Medical"])

>>> english_tasks = filter_tasks(my_tasks, languages=["eng"])

>>> latin_script_tasks = filter_tasks(my_tasks, script=["Latn"])

>>> text_image_tasks = filter_tasks(my_tasks, modalities=["text", "image"], exclusive_modality_filter=True)

Source code in mteb/filter_tasks.py
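The two settings of exclusive_modality_filter come down to set overlap versus set equality. The helpers modality_match and keep, and the task names, are hypothetical, and the sketch ignores every other filter.

```python
from __future__ import annotations


def modality_match(
    task_modalities: list[str],
    filter_modalities: list[str],
    exclusive_modality_filter: bool = False,
) -> bool:
    task_set, filter_set = set(task_modalities), set(filter_modalities)
    if exclusive_modality_filter:
        # exact match: the same modalities on both sides
        return task_set == filter_set
    # non-exclusive: any overlap is enough
    return bool(task_set & filter_set)


task_modalities = {
    "TextOnlyTask": ["text"],
    "ImageOnlyTask": ["image"],
    "TextImageTask": ["text", "image"],
}


def keep(filter_modalities: list[str], exclusive: bool) -> list[str]:
    return [
        name
        for name, mods in task_modalities.items()
        if modality_match(mods, filter_modalities, exclusive)
    ]


print(keep(["text", "image"], exclusive=False))  # ['TextOnlyTask', 'ImageOnlyTask', 'TextImageTask']
print(keep(["text", "image"], exclusive=True))  # ['TextImageTask']
print(keep(["text"], exclusive=True))  # ['TextOnlyTask']
```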


Metadata

Each task also contains extensive metadata. We annotate this using the following object, which allows us to use pydantic to validate the metadata.

mteb.TaskMetadata


Bases: BaseModel

Metadata for a task.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | MetadataDatasetDict | All arguments to pass to datasets.load_dataset to load the dataset for the task. |
| name | str | The name of the task. |
| description | str | A description of the task. |
| type | TaskType | The type of the task. This includes "Classification", "Summarization", "STS", "Retrieval", "Reranking", "Clustering", "PairClassification", "BitextMining". The type should match the abstask type. |
| category | TaskCategory \| None | The category of the task, e.g. "t2t" (text to text) or "t2i" (text to image). |
| reference | StrURL \| None | A URL to the documentation of the task, e.g. a published paper. |
| eval_splits | list[str] | The splits of the dataset used for evaluation. |
| eval_langs | Languages | The languages of the dataset used for evaluation. Languages follow the IETF BCP 47 standard, written as "{language}-{script}" tags (e.g. "eng-Latn"), where the language is an ISO 639-3 code (e.g. "eng") and the script an ISO 15924 code (e.g. "Latn"). Can be either a list of languages or a dictionary mapping HuggingFace subsets to lists of languages (e.g. if the HuggingFace dataset contains different languages). |
| main_score | str | The main score used for evaluation. |
| date | tuple[StrDate, StrDate] \| None | The date when the data was collected, specified as a tuple of two dates. |
| domains | list[TaskDomain] \| None | The domains of the data. This includes "Non-fiction", "Social", "Fiction", "News", "Academic", "Blog", "Encyclopaedic", "Government", "Legal", "Medical", "Poetry", "Religious", "Reviews", "Web", "Spoken", "Written". A dataset can belong to multiple domains. |
| task_subtypes | list[TaskSubtype] \| None | The subtypes of the task, e.g. "Sentiment/Hate speech" or "Thematic clustering". Feel free to update the list as needed. |
| license | Licenses \| StrURL \| None | The license of the data, specified in lowercase, e.g. "cc-by-nc-4.0". If the license is not specified, use "not specified". For custom licenses, a URL is used. |
| annotations_creators | AnnotatorType \| None | The type of the annotators. Includes "expert-annotated" (annotated by experts), "human-annotated" (annotated e.g. by mturkers), "derived" (derived from structure in the data). |
| dialect | list[str] \| None | The dialect of the data, if applicable. Ideally specified as a BCP-47 language tag. Empty list if no dialects are present. |
| sample_creation | SampleCreationMethod \| None | The method of text creation. Includes "found", "created", "machine-translated", "machine-translated and verified", and "machine-translated and localized". |
| prompt | str \| PromptDict \| None | The prompt used for the task. Can be a string or a dictionary containing the query and passage prompts. |
| bibtex_citation | str \| None | The BibTeX citation for the dataset. Should be an empty string if no citation is available. |
| adapted_from | Sequence[str] \| None | Datasets adapted (translated, sampled from, etc.) from other datasets. |
| is_public | bool | Whether the dataset is publicly available. If False (closed/private), a HuggingFace token is required to run the datasets. |
| superseded_by | str \| None | Denotes the task that this task is superseded by. Used to issue a warning to users of outdated datasets, while maintaining reproducibility of existing benchmarks. |

Source code in mteb/abstasks/task_metadata.py


bcp47_codes

property


Return the language and script codes of the dataset, formatted in accordance with the BCP-47 standard.

descriptive_stat_path

property


Return the path to the descriptive statistics file.

descriptive_stats

property


Return the descriptive statistics for the dataset.

hf_subsets

property


Return the huggingface subsets.

hf_subsets_to_langscripts

property


Return a dictionary mapping huggingface subsets to languages.

intext_citation

property


Create an in-text citation for the dataset.

is_multilingual

property


Check if the task is multilingual.

languages

property


Return the languages of the dataset as iso639-3 codes.

n_samples

property


Returns the number of samples in the dataset

revision

property


Return the dataset revision.

scripts

property


Return the scripts of the dataset as iso15924 codes.
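The eval_langs field and the properties derived from it (hf_subsets, hf_subsets_to_langscripts, languages, scripts) can be approximated with plain Python. The functions below are hypothetical; treating a list-valued eval_langs as a single "default" subset, and deduplicating and sorting the results, are assumptions of this sketch rather than documented mteb behaviour.

```python
from __future__ import annotations


def hf_subsets_to_langscripts(
    eval_langs: list[str] | dict[str, list[str]],
) -> dict[str, list[str]]:
    """Assumption: a plain list means the dataset has a single 'default' subset."""
    if isinstance(eval_langs, dict):
        return eval_langs
    return {"default": eval_langs}


def split_bcp47(tag: str) -> tuple[str, str]:
    """Split an 'eng-Latn' style tag into (ISO 639-3 language, ISO 15924 script)."""
    language, script = tag.split("-", maxsplit=1)
    return language, script


def languages_and_scripts(eval_langs) -> tuple[list[str], list[str]]:
    languages, scripts = set(), set()
    for tags in hf_subsets_to_langscripts(eval_langs).values():
        for tag in tags:
            language, script = split_bcp47(tag)
            languages.add(language)
            scripts.add(script)
    return sorted(languages), sorted(scripts)


eval_langs = {"en": ["eng-Latn"], "de-en": ["deu-Latn", "eng-Latn"], "ru": ["rus-Cyrl"]}
print(list(hf_subsets_to_langscripts(eval_langs)))  # ['en', 'de-en', 'ru']
print(languages_and_scripts(eval_langs))  # (['deu', 'eng', 'rus'], ['Cyrl', 'Latn'])
```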

generate_dataset_card(existing_dataset_card=None)


Generates a dataset card for the task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| existing_dataset_card | DatasetCard \| None | The existing dataset card to update. If None, a new dataset card will be created. | None |

Returns:

| Name | Type | Description |
|---|---|---|
| DatasetCard | DatasetCard | The dataset card for the task. |

Source code in mteb/abstasks/task_metadata.py


get_modalities(prompt_type=None)


Get the modalities of the task based on its category, restricted to the given prompt_type if one is provided.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| prompt_type | PromptType \| None | The prompt type to get the modalities for. | None |

Returns:

| Type | Description |
|---|---|
| list[Modalities] | A list of modalities for the task. |

Raises:

| Type | Description |
|---|---|
| ValueError | If the prompt type is not recognized. |

Source code in mteb/abstasks/task_metadata.py
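As an illustration of how a task category can be read as query-side and document-side modalities, here is a hypothetical modalities_for helper. It assumes categories are split at "2", only maps "t" and "i", and returns all modalities when no prompt type is given; the local PromptType enum is a stand-in, and mteb's get_modalities may differ in these details.

```python
from __future__ import annotations

from enum import Enum


class PromptType(str, Enum):
    """Hypothetical stand-in for mteb's PromptType (query and document sides)."""

    query = "query"
    document = "document"


LETTER_TO_MODALITY = {"t": "text", "i": "image"}


def modalities_for(category: str, prompt_type: PromptType | None = None) -> list[str]:
    """Derive modalities from a category such as 'it2t' (query side, '2', document side)."""
    query_side, document_side = category.split("2")
    if prompt_type is None:
        letters = query_side + document_side
    elif prompt_type == PromptType.query:
        letters = query_side
    elif prompt_type == PromptType.document:
        letters = document_side
    else:
        raise ValueError(f"Unknown prompt type: {prompt_type!r}")
    modalities: list[str] = []
    for letter in letters:
        modality = LETTER_TO_MODALITY.get(letter)  # 'c' (category) maps to no modality here
        if modality is not None and modality not in modalities:
            modalities.append(modality)
    return modalities


print(modalities_for("it2t", PromptType.query))  # ['image', 'text']
print(modalities_for("it2t", PromptType.document))  # ['text']
print(modalities_for("t2c"))  # ['text']
```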


is_filled()


Check if all the metadata fields are filled.

Returns:

| Type | Description |
|---|---|
| bool | True if all the metadata fields are filled, False otherwise. |

Source code in mteb/abstasks/task_metadata.py


push_dataset_card_to_hub(repo_name)


Pushes the dataset card to the huggingface hub.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| repo_name | str | The name of the repository to push the dataset card to. | required |

Source code in mteb/abstasks/task_metadata.py


Metadata Types

mteb.abstasks.task_metadata.AnnotatorType = Literal['expert-annotated', 'human-annotated', 'derived', 'LM-generated', 'LM-generated and reviewed']

module-attribute


The type of the annotators. This is often important for understanding the quality of a dataset.

mteb.abstasks.task_metadata.SampleCreationMethod = Literal['found', 'created', 'created and machine-translated', 'human-translated and localized', 'human-translated', 'machine-translated', 'machine-translated and verified', 'machine-translated and localized', 'LM-generated and verified', 'machine-translated and LM verified', 'rendered', 'multiple']

module-attribute


How the text was created. This can be an important factor for understanding the quality of a dataset; e.g. it can be used to filter out machine-translated datasets.

mteb.abstasks.task_metadata.TaskCategory = Literal['t2t', 't2c', 'i2i', 'i2c', 'i2t', 't2i', 'it2t', 'it2i', 'i2it', 't2it', 'it2it']

module-attribute


The category of the task.

- t2t: text to text

- t2c: text to category

- i2i: image to image

- i2c: image to category

- i2t: image to text

- t2i: text to image

- it2t: image+text to text

- it2i: image+text to image

- i2it: image to image+text

- t2it: text to image+text

- it2it: image+text to image+text
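Because TaskCategory, like the other metadata types in this section, is a typing.Literal, its allowed values can be listed at runtime with typing.get_args. The sketch below uses a local copy of the literal (not imported from mteb) to validate a category code and expand it into the description given in the list above; describe_category and SIDE_NAMES are hypothetical helpers.

```python
from typing import Literal, get_args

# Local copy of the TaskCategory literal listed above (not imported from mteb).
TaskCategory = Literal[
    "t2t", "t2c", "i2i", "i2c", "i2t", "t2i", "it2t", "it2i", "i2it", "t2it", "it2it"
]

SIDE_NAMES = {"t": "text", "i": "image", "c": "category", "it": "image+text"}


def describe_category(category: str) -> str:
    """Expand a category code such as 'it2t' into 'image+text to text'."""
    if category not in get_args(TaskCategory):
        raise ValueError(f"Unknown task category: {category!r}")
    source, target = category.split("2")
    return f"{SIDE_NAMES[source]} to {SIDE_NAMES[target]}"


for code in get_args(TaskCategory):
    print(code, "->", describe_category(code))
```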

mteb.abstasks.task_metadata.TaskDomain = Literal['Academic', 'Blog', 'Constructed', 'Encyclopaedic', 'Engineering', 'Fiction', 'Government', 'Legal', 'Medical', 'News', 'Non-fiction', 'Poetry', 'Religious', 'Reviews', 'Scene', 'Social', 'Spoken', 'Subtitles', 'Web', 'Written', 'Programming', 'Chemistry', 'Financial', 'Entertainment']

module-attribute


The domains follow the categories used in the Universal Dependencies project, though we updated them where deemed appropriate. These do not have to be mutually exclusive.

mteb.abstasks.task_metadata.TaskType = Literal[_TASK_TYPE]

module-attribute


The type of the task. E.g. includes "Classification", "Retrieval" and "Clustering".

mteb.abstasks.task_metadata.TaskSubtype = Literal['Article retrieval', 'Patent retrieval', 'Conversational retrieval', 'Dialect pairing', 'Dialog Systems', 'Discourse coherence', 'Duplicate Image Retrieval', 'Language identification', 'Linguistic acceptability', 'Political classification', 'Question answering', 'Sentiment/Hate speech', 'Thematic clustering', 'Scientific Reranking', 'Claim verification', 'Topic classification', 'Code retrieval', 'False Friends', 'Cross-Lingual Semantic Discrimination', 'Textual Entailment', 'Counterfactual Detection', 'Emotion classification', 'Reasoning as Retrieval', 'Rendered Texts Understanding', 'Image Text Retrieval', 'Object recognition', 'Scene recognition', 'Caption Pairing', 'Emotion recognition', 'Textures recognition', 'Activity recognition', 'Tumor detection', 'Duplicate Detection', 'Rendered semantic textual similarity', 'Intent classification']

module-attribute


The subtypes of the task, e.g. "Sentiment/Hate speech" or "Thematic clustering". This list can be updated as needed.

mteb.abstasks.task_metadata.PromptDict = TypedDict('PromptDict', {(prompt_type.value): str for prompt_type in PromptType}, total=False)

module-attribute


A dictionary containing the prompt used for the task.

Attributes:

| Name | Type | Description |
|---|---|---|
| query | str | The prompt used for the queries in the task. |
| document | str | The prompt used for the passages in the task. |
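Since TaskMetadata.prompt may be either a string or a PromptDict, code consuming it has to handle both shapes. The sketch below mirrors PromptDict locally as a TypedDict with total=False (so either key may be omitted); the resolve_prompt helper and the rule that a plain string applies to both queries and documents are assumptions for illustration, not mteb's documented behaviour.

```python
from __future__ import annotations

from typing import TypedDict


class PromptDict(TypedDict, total=False):
    """Local mirror of PromptDict, assuming the two keys listed above."""

    query: str
    document: str


def resolve_prompt(prompt: str | PromptDict | None, prompt_type: str) -> str | None:
    """Pick the prompt for 'query' or 'document'.

    Assumption: a plain string applies to both sides; a dict may define either key.
    """
    if prompt is None:
        return None
    if isinstance(prompt, str):
        return prompt
    return prompt.get(prompt_type)


retrieval_prompt: PromptDict = {"query": "Given a question, retrieve passages that answer it"}
print(resolve_prompt(retrieval_prompt, "query"))  # Given a question, retrieve passages that answer it
print(resolve_prompt(retrieval_prompt, "document"))  # None
```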

The Task Object

All tasks in mteb inherit from the following abstract class.

mteb.AbsTask


Bases: ABC

The abstract class for the tasks

Attributes:

| Name | Type | Description |
|---|---|---|
| metadata | TaskMetadata | The metadata describing the task. |
| dataset | dict[HFSubset, DatasetDict] \| None | The dataset, represented as a dictionary of the form {"hf subset": {"split": Dataset}}, where "split" is the dataset split (e.g. "test") and Dataset is a datasets.Dataset object. "hf subset" is the data subset on HuggingFace, typically used to denote the language, e.g. datasets.load_dataset("data", "en"). If the dataset does not have a subset, this is simply "default". |
| seed | int | The random seed used for reproducibility. |
| hf_subsets | list[HFSubset] | The list of HuggingFace subsets to use. |
| data_loaded | bool | Denotes whether the dataset is loaded. This is used to avoid loading the dataset multiple times. |
| abstask_prompt | str | Prompt to use for the task with instruction models if no prompt is provided in TaskMetadata.prompt. |
| fast_loading | bool | Deprecated. Denotes whether the task should be loaded using the fast loading method. This is only possible if the dataset has a "default" config. We don't recommend this method and suggest using different subsets for loading datasets instead. It is kept only for historical reasons and will be removed in the future. |

Source code in mteb/abstasks/abstask.py
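The nested {"hf subset": {"split": Dataset}} layout of dataset, restricted by hf_subsets and the evaluation splits, can be pictured with ordinary dictionaries. In this sketch lists of dicts replace datasets.Dataset objects, and the subsets, rows and the iter_eval_data helper are hypothetical; for a dataset without subsets the outer key would simply be "default".

```python
from __future__ import annotations

from collections.abc import Iterator

# Plain lists of dicts stand in for datasets.Dataset so the sketch runs without the datasets package.
Rows = list[dict]

dataset: dict[str, dict[str, Rows]] = {
    "en": {
        "train": [{"text": "great movie", "label": 1}, {"text": "dull plot", "label": 0}],
        "test": [{"text": "loved it", "label": 1}],
    },
    "de": {"test": [{"text": "schlechter Film", "label": 0}]},
}


def iter_eval_data(
    dataset: dict[str, dict[str, Rows]], hf_subsets: list[str], eval_splits: list[str]
) -> Iterator[tuple[str, str, Rows]]:
    """Yield (subset, split, rows) for every requested subset/split pair that exists."""
    for subset in hf_subsets:
        for split in eval_splits:
            if split in dataset.get(subset, {}):
                yield subset, split, dataset[subset][split]


for subset, split, rows in iter_eval_data(dataset, ["en", "de"], ["test"]):
    print(subset, split, len(rows))  # "en test 1", then "de test 1"
```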


eval_splits

property


Returns the evaluation splits of the task.

is_aggregate

property


Whether the task is an aggregate of multiple tasks.

languages

property


Returns the languages of the task.

modalities

property


Returns the modalities of the task.

prediction_file_name

property


The name of the prediction file, in the format {task_name}_predictions.json.

superseded_by

property


If the dataset is superseded by another dataset, return the name of the new dataset.

__init__(seed=42, **kwargs)


The init function. This is called primarily to set the seed.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| seed | int | An integer seed. | 42 |
| kwargs | Any | Arguments passed to subclasses. | {} |

Source code in mteb/abstasks/abstask.py


calculate_descriptive_statistics(overwrite_results=False, num_proc=1)

Calculates descriptive statistics from the dataset.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| overwrite_results | bool | Whether to overwrite existing results. If False and results already exist, the existing results will be loaded from cache. | False |
| num_proc | int | Number of processes to use for loading the dataset. | 1 |

Returns:

| Type | Description |
|---|---|
| dict[str, DescriptiveStatistics] | A dictionary containing descriptive statistics for each split. |

Source code in mteb/abstasks/abstask.py


calculate_metadata_metrics(overwrite_results=False)

Old name of calculate_descriptive_statistics, kept for backward compatibility.

Source code in mteb/abstasks/abstask.py


check_if_dataset_is_superseded()

Check if the dataset is superseded by a newer version.

Source code in mteb/abstasks/abstask.py


dataset_transform(num_proc=1)

Transform operations applied to the dataset after loading.

This method is useful when the dataset from Hugging Face is not in an mteb-compatible format. Override this method if your dataset requires additional transformation.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| num_proc | int | Number of processes to use for the transformation. | 1 |

Source code in mteb/abstasks/abstask.py


evaluate(model, split='test', subsets_to_run=None, *, encode_kwargs, prediction_folder=None, num_proc=1, **kwargs)

Evaluates an MTEB-compatible model on the task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| model | MTEBModels | MTEB-compatible model. Implements an encode(sentences) method that encodes sentences and returns an array of embeddings. | required |
| split | str | Which split (e.g. "test") to use. | 'test' |
| subsets_to_run | list[HFSubset] \| None | List of Hugging Face subsets (HFSubsets) to evaluate. If None, all subsets are evaluated. | None |
| encode_kwargs | EncodeKwargs | Additional keyword arguments that are passed to the model's | required |
| prediction_folder | Path \| None | Folder to save model predictions to. | None |
| num_proc | int | Number of processes to use for loading the dataset or processing. | 1 |
| kwargs | Any | Additional keyword arguments that are passed to the _evaluate_subset method. | {} |

Returns:

| Type | Description |
|---|---|
| Mapping[HFSubset, ScoresDict] | A dictionary with the scores for each subset. |

Raises:

| Type | Description |
|---|---|
| TypeError | If the model is a CrossEncoder and the task does not support CrossEncoders. |
| TypeError | If the model is a SearchProtocol and the task does not support search. |

Source code in mteb/abstasks/abstask.py


fast_load()

Deprecated. Loads all subsets at once, then groups them by language. Fast loading has two requirements:

- Each row in the dataset should have a 'lang' feature giving the corresponding language or language pair.
- The dataset must have a 'default' config that loads all of its subsets (see more here).

Source code in mteb/abstasks/abstask.py


filter_eval_splits(eval_splits)

Filter the evaluation splits of the task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| eval_splits | Sequence[str] \| None | A list of evaluation splits to keep. If None, all splits are kept. | required |

Returns:

| Type | Description |
|---|---|
| Self | The filtered task |

Source code in mteb/abstasks/abstask.py


filter_languages(languages, script=None, hf_subsets=None, exclusive_language_filter=False)

Filter the languages of the task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| languages | Sequence[str] \| None | List of languages to filter the task by. Each entry can be either a 3-letter language code (e.g. "eng") or a code that also includes the script (e.g. "eng-Latn"). | required |
| script | Sequence[str] \| None | A list of scripts to filter the task by. Ignored if the language code already specifies the script. If None, all scripts are included. If the language code does not specify the script, the intersection of the language and script is used. | None |
| hf_subsets | Sequence[HFSubset] \| None | A list of Hugging Face subsets to filter on. This is useful if a dataset has multiple subsets containing the desired language but you only want to test on one. An example is STS22, which has both "en" and "de-en", both of which contain English. | None |
| exclusive_language_filter | bool | Some datasets contain more than one language per subset; e.g., in STS22 the subset "de-en" contains eng and deu. If exclusive_language_filter is False, both "en" and "de-en" are kept when filtering on English. If it is True, only subsets whose languages are all among the specified languages are kept, so "de-en" is dropped. | False |

Returns:

| Type | Description |
|---|---|
| Self | The filtered task |

Source code in mteb/abstasks/abstask.py

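The following pure-Python sketch shows how languages, script, hf_subsets and exclusive_language_filter interact, following the parameter descriptions above. It is not mteb's implementation. The helper names filter_subsets and _code_matches are hypothetical, as is the subset_langs mapping. That mapping goes from subset name to "lang-Script" codes, while the real method reads this information from the task metadata.

```python
from __future__ import annotations


def _code_matches(code: str, wanted: str, script: list[str] | None) -> bool:
    """Check a subset code such as "eng-Latn" against one filter entry (hypothetical)."""
    lang, code_script = code.split("-", 1)  # assumes every code is "lang-Script"
    if "-" in wanted:
        # The entry already names a script (e.g. "eng-Latn"), so `script` is ignored.
        return code == wanted
    # Bare language code: intersect with the script filter, if one is given.
    return lang == wanted and (script is None or code_script in script)


def filter_subsets(
    subset_langs: dict[str, list[str]],
    languages: list[str] | None,
    script: list[str] | None = None,
    hf_subsets: list[str] | None = None,
    exclusive_language_filter: bool = False,
) -> list[str]:
    """Hypothetical helper: return the subset names that survive the filter."""
    kept = []
    for subset, codes in subset_langs.items():
        if hf_subsets is not None and subset not in hf_subsets:
            continue  # restricted to an explicit list of subsets
        if languages is None:
            kept.append(subset)
            continue
        # For each language in the subset: does it match any requested entry?
        hits = [any(_code_matches(c, w, script) for w in languages) for c in codes]
        # Non-exclusive: one matching language is enough.
        # Exclusive: every language in the subset must match the filter.
        if (all(hits) if exclusive_language_filter else any(hits)):
            kept.append(subset)
    return kept


sts22_like = {"en": ["eng-Latn"], "de-en": ["deu-Latn", "eng-Latn"], "de": ["deu-Latn"]}
print(filter_subsets(sts22_like, ["eng"]))  # ['en', 'de-en']
print(filter_subsets(sts22_like, ["eng"], exclusive_language_filter=True))  # ['en']
```

The sketch treats the subset as the unit being kept or dropped, which mirrors the STS22 example in the table. A real task also tracks scripts and metadata that are omitted here.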

load_data(num_proc=1, **kwargs)

Loads the dataset from the HuggingFace Hub.

This is the main loading function for a Task. Do not override it; instead, we recommend using dataset_transform, which is called after the dataset is loaded using datasets.load_dataset.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| num_proc | int | Number of processes to use for loading the dataset. | 1 |
| kwargs | Any | Additional keyword arguments passed to the load_dataset function. Kept for forward compatibility. | {} |

Source code in mteb/abstasks/abstask.py


push_dataset_to_hub(repo_name, num_proc=1)

Push the dataset to the HuggingFace Hub.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| repo_name | str | The name of the repository to push the dataset to. | required |
| num_proc | int | Number of processes to use for loading the dataset. | 1 |

Examples:

>>> import mteb
>>> task = mteb.get_task("Caltech101")
>>> repo_name = f"myorg/{task.metadata.name}"
>>> # Push the dataset to the Hub
>>> task.push_dataset_to_hub(repo_name)

Source code in mteb/abstasks/abstask.py


stratified_subsampling(dataset_dict, seed, splits=['test'], label='label', n_samples=2048)

staticmethod

Subsamples the dataset with stratification by the supplied label.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| dataset_dict | DatasetDict | The DatasetDict object. | required |
| seed | int | The random seed. | required |
| splits | list[str] | The splits of the dataset. | ['test'] |
| label | str | The label on which the stratified sampling is based. | 'label' |
| n_samples | int | Optional number of samples to subsample. Default is max_n_samples. | 2048 |

Returns:

| Type | Description |
|---|---|
| DatasetDict | A subsampled DatasetDict object. |

Source code in mteb/abstasks/abstask.py

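Stratified subsampling can be illustrated without the datasets library. The sketch below is a stand-in, not mteb's code. It works on a plain list of dict rows instead of a DatasetDict split, and the function name stratified_subsample is hypothetical. It draws n_samples rows while keeping each label's share of the data, and rounds the per-label quotas with the largest-remainder method so they add up exactly to n_samples.

```python
from __future__ import annotations

import random
from collections import defaultdict


def stratified_subsample(
    rows: list[dict], label: str = "label", n_samples: int = 2048, seed: int = 42
) -> list[dict]:
    """Hypothetical helper: draw n_samples rows with the same label proportions."""
    if len(rows) <= n_samples:
        return list(rows)  # nothing to subsample
    rng = random.Random(seed)
    groups: dict = defaultdict(list)
    for row in rows:
        groups[row[label]].append(row)

    # Proportional quota per label; floor first, then hand out the remaining
    # slots to the labels with the largest fractional parts.
    quotas, fractions = {}, []
    for lab, group in groups.items():
        exact = n_samples * len(group) / len(rows)
        quotas[lab] = int(exact)
        fractions.append((exact - int(exact), lab))
    leftover = n_samples - sum(quotas.values())
    for _, lab in sorted(fractions, key=lambda t: t[0], reverse=True)[:leftover]:
        quotas[lab] += 1

    sample = []
    for lab, group in groups.items():
        sample.extend(rng.sample(group, quotas[lab]))  # sample without replacement
    rng.shuffle(sample)
    return sample


rows = [{"text": f"doc {i}", "label": "a" if i < 700 else "b"} for i in range(1000)]
sub = stratified_subsample(rows, n_samples=100)
print(sum(r["label"] == "a" for r in sub), sum(r["label"] == "b" for r in sub))  # 70 30
```

A fixed seed makes the draw reproducible, which matters when the same subsample must be regenerated across runs.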

unload_data()

Unloads the dataset from memory.

Source code in mteb/abstasks/abstask.py


Multimodal Tasks

Tasks that support any modality (text, image, etc.) inherit from the following abstract classes. Retrieval tasks support multimodal input (e.g. image + text queries with an image corpus, or vice versa).

mteb.abstasks.retrieval.AbsTaskRetrieval

Bases: AbsTask

Abstract class for retrieval experiments.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[str, dict[str, RetrievalSplitData]] | A nested dictionary where the first key is the subset (language or "default"), the second key is the split (e.g., "train", "test"), and the value is a RetrievalSplitData object. |
| ignore_identical_ids | bool | If True, identical IDs in queries and corpus are ignored during evaluation. |
| k_values | Sequence[int] | A sequence of integers representing the k values for evaluation metrics. |
| skip_first_result | bool | If True, the first result is skipped during evaluation. |
| abstask_prompt | | Prompt to use for the task for instruction models if no prompt is provided in TaskMetadata.prompt. |

Source code in mteb/abstasks/retrieval.py


convert_to_reranking(top_ranked_path, top_k=10)

Converts a retrieval task to a reranking task by loading predictions from a previous model run in which prediction_folder was specified.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| top_ranked_path | str \| Path | Path to the file or folder with the top-ranked predictions. | required |
| top_k | int | Number of results to load. | 10 |

Returns:

| Type | Description |
|---|---|
| Self | The current task reformulated as a reranking task. |

Raises:

| Type | Description |
|---|---|
| FileNotFoundError | If the specified path does not exist. |
| ValueError | If the loaded top-ranked results are not in the expected format. |

Source code in mteb/abstasks/retrieval.py

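Conceptually, the reranking conversion keeps only the top_k highest-scoring documents per query from an earlier run. The sketch below assumes the saved predictions have already been parsed into a {query_id: {doc_id: score}} mapping. The on-disk file format is not described here. The helper top_k_per_query is hypothetical, and the ValueError only loosely mirrors the "not in the expected format" case listed above.

```python
from __future__ import annotations

import heapq


def top_k_per_query(
    predictions: dict[str, dict[str, float]], top_k: int = 10
) -> dict[str, list[str]]:
    """Hypothetical helper: keep the top_k highest-scoring document IDs per query."""
    top_ranked = {}
    for query_id, doc_scores in predictions.items():
        if not isinstance(doc_scores, dict):
            raise ValueError(f"Expected a {{doc_id: score}} mapping for query {query_id!r}")
        # nlargest returns items sorted by descending score.
        best = heapq.nlargest(top_k, doc_scores.items(), key=lambda item: item[1])
        top_ranked[query_id] = [doc_id for doc_id, _ in best]
    return top_ranked


run = {"q1": {"d1": 0.12, "d2": 0.91, "d3": 0.55, "d4": 0.40}, "q2": {"d5": 0.3}}
print(top_k_per_query(run, top_k=2))  # {'q1': ['d2', 'd3'], 'q2': ['d5']}
```

Queries with fewer than top_k candidates simply keep all of them, as q2 shows.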

convert_v1_dataset_format_to_v2(num_proc)

Converts the dataset from the v1 format (self.queries, self.document) to the v2 format (self.dataset).

Source code in mteb/abstasks/retrieval.py


evaluate(model, split='test', subsets_to_run=None, *, encode_kwargs, prediction_folder=None, num_proc=1, **kwargs)

Evaluate the model on the retrieval task.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| model | MTEBModels | Model to evaluate. Model should implement the SearchProtocol or be an Encoder or CrossEncoderProtocol. | required |
| split | str | Split to evaluate on | 'test' |
| subsets_to_run | list[HFSubset] \| None | Optional list of subsets to evaluate on | None |
| encode_kwargs | EncodeKwargs | Keyword arguments passed to the encoder | required |
| prediction_folder | Path \| None | Folder to save model predictions | None |
| num_proc | int | Number of processes to use | 1 |
| **kwargs | Any | Additional keyword arguments passed to the evaluator | {} |

Returns:

| Type | Description |
|---|---|
| Mapping[HFSubset, ScoresDict] | Dictionary mapping subsets to their evaluation scores |

Source code in mteb/abstasks/retrieval.py


load_data(num_proc=1, **kwargs)

Load the dataset for the retrieval task.

Source code in mteb/abstasks/retrieval.py


task_specific_scores(scores, qrels, results, hf_split, hf_subset)

Calculate task specific scores. Override in subclass if needed.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| scores | dict[str, dict[str, float]] | Dictionary of scores | required |
| qrels | RelevantDocumentsType | Relevant documents | required |
| results | dict[str, dict[str, float]] | Retrieval results | required |
| hf_split | str | Split to evaluate on | required |
| hf_subset | str | Subset to evaluate on | required |

Source code in mteb/abstasks/retrieval.py

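task_specific_scores receives qrels and retrieval results as {query_id: {doc_id: value}} mappings. The sketch below computes two standard retrieval metrics, nDCG@k and recall@k, from scratch on mappings of that shape, to show what they feed into. It is an illustration only. The helper names are hypothetical, and mteb's own metric computation may differ in details such as tie handling and the gain definition. This sketch uses linear gains.

```python
from __future__ import annotations

import math


def _ranked_docs(scores: dict[str, float], k: int) -> list[str]:
    """Document IDs sorted by descending retrieval score, cut at rank k."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


def ndcg_at_k(qrels: dict[str, dict[str, int]], results: dict[str, dict[str, float]], k: int) -> float:
    """Mean nDCG@k over queries, with gain = graded relevance (hypothetical helper)."""
    per_query = []
    for qid, rels in qrels.items():
        ranked = _ranked_docs(results.get(qid, {}), k)
        dcg = sum(rels.get(doc, 0) / math.log2(rank + 2) for rank, doc in enumerate(ranked))
        ideal = sorted(rels.values(), reverse=True)[:k]
        idcg = sum(rel / math.log2(rank + 2) for rank, rel in enumerate(ideal))
        per_query.append(dcg / idcg if idcg > 0 else 0.0)
    return sum(per_query) / len(per_query) if per_query else 0.0


def recall_at_k(qrels: dict[str, dict[str, int]], results: dict[str, dict[str, float]], k: int) -> float:
    """Mean fraction of relevant documents found in the top k (hypothetical helper)."""
    per_query = []
    for qid, rels in qrels.items():
        relevant = {doc for doc, rel in rels.items() if rel > 0}
        if not relevant:
            continue  # queries without relevant documents are skipped
        retrieved = set(_ranked_docs(results.get(qid, {}), k))
        per_query.append(len(relevant & retrieved) / len(relevant))
    return sum(per_query) / len(per_query) if per_query else 0.0


qrels = {"q1": {"d1": 1, "d3": 1}}
results = {"q1": {"d1": 0.9, "d2": 0.8, "d3": 0.7}}
print(round(ndcg_at_k(qrels, results, 3), 4))  # 0.9197
print(recall_at_k(qrels, results, 2))  # 0.5
```

A subclass could add extra entries of this kind to scores, but the exact keys mteb expects are not specified here.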

mteb.abstasks.retrieval_dataset_loaders.RetrievalSplitData

Bases: TypedDict

A dictionary containing the corpus, queries, relevant documents, instructions, and top-ranked documents for a retrieval task.

Attributes:

| Name | Type | Description |
|---|---|---|
| corpus | CorpusDatasetType | The corpus dataset containing documents. Should have columns |
| queries | QueryDatasetType | The queries dataset containing queries. Should have columns |
| relevant_docs | RelevantDocumentsType | A mapping of query IDs to relevant document IDs and their relevance scores. Should have columns |
| top_ranked | TopRankedDocumentsType \| None | A mapping of query IDs to a list of top-ranked document IDs. Should have columns |

Source code in mteb/abstasks/retrieval_dataset_loaders.py


mteb.abstasks.classification.AbsTaskClassification

Bases: AbsTask

Abstract class for classification tasks.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | Hugging Face dataset containing the data for the task. Should have a train split (the split name can be changed via train_split). Must contain the following columns: text: str (for text) or PIL.Image (for image). Column name can be changed via |
| evaluator_model | SklearnModelProtocol | The model to use for evaluation. Can be any sklearn-compatible model. Default is |
| samples_per_label | | Number of samples per label to use for training the evaluator model. Default is 8. |
| n_experiments | | Number of experiments to run. Default is 10. |
| train_split | | Name of the split to use for training the evaluator model. Default is "train". |
| label_column_name | | Name of the column containing the labels. Default is "label". |
| input_column_name | | Name of the column containing the input data. Default is "text". |
| abstask_prompt | | Prompt to use for the task for instruction models if no prompt is provided in TaskMetadata.prompt. |

Source code in mteb/abstasks/classification.py

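The samples_per_label and n_experiments attributes suggest a few-shot protocol. In each experiment, up to samples_per_label training examples per label are drawn, a classifier is fit on their embeddings, and the scores are averaged over experiments. The sketch below shows that loop under stated assumptions, using toy 2-D vectors as "embeddings". To stay dependency-free, it swaps the sklearn evaluator_model for a simple nearest-centroid classifier. All function names are hypothetical, and this is not mteb's code.

```python
from __future__ import annotations

import math
import random
from collections import defaultdict


def undersample(labels: list[str], samples_per_label: int, rng: random.Random) -> list[int]:
    """Pick at most samples_per_label random indices for every label."""
    order = list(range(len(labels)))
    rng.shuffle(order)
    taken: dict = defaultdict(int)
    chosen = []
    for i in order:
        if taken[labels[i]] < samples_per_label:
            taken[labels[i]] += 1
            chosen.append(i)
    return chosen


def nearest_centroid_accuracy(train_x, train_y, test_x, test_y) -> float:
    """Stand-in for the sklearn evaluator: predict the label of the closest centroid."""
    sums: dict = {}
    counts: dict = defaultdict(int)
    for x, y in zip(train_x, train_y):
        acc = sums.setdefault(y, [0.0] * len(x))
        for d, v in enumerate(x):
            acc[d] += v
        counts[y] += 1
    centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}
    correct = 0
    for x, y in zip(test_x, test_y):
        pred = min(centroids, key=lambda c: math.dist(x, centroids[c]))
        correct += pred == y
    return correct / len(test_y)


def run_experiments(train_x, train_y, test_x, test_y, samples_per_label=8, n_experiments=10, seed=42):
    """Average accuracy over n_experiments independent few-shot draws."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_experiments):
        idx = undersample(train_y, samples_per_label, rng)
        scores.append(nearest_centroid_accuracy(
            [train_x[i] for i in idx], [train_y[i] for i in idx], test_x, test_y))
    return sum(scores) / len(scores)


train_x = [(i * 0.1, 0.0) for i in range(20)] + [(10 + i * 0.1, 10.0) for i in range(20)]
train_y = ["neg"] * 20 + ["pos"] * 20
test_x, test_y = [(0.5, 0.5), (10.5, 9.5)], ["neg", "pos"]
print(run_experiments(train_x, train_y, test_x, test_y))  # 1.0
```

Repeating the draw n_experiments times smooths out the variance that comes from training on only a handful of examples per label.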

evaluate(model, split='test', subsets_to_run=None, *, encode_kwargs, prediction_folder=None, num_proc=1, **kwargs)

Evaluate a model on the classification task.

Unlike other tasks, this requires a train split.

Source code in mteb/abstasks/classification.py


mteb.abstasks.multilabel_classification.AbsTaskMultilabelClassification

Bases: AbsTaskClassification

Abstract class for multioutput classification tasks.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | Hugging Face dataset containing the data for the task. The dataset must contain the columns specified by input_column_name and label_column_name. The input column must contain the text or image to be classified, and the label column must contain a list of labels for each example. |
| input_column_name | str | Name of the column containing the input text. |
| label_column_name | str | Name of the column containing the labels. |
| samples_per_label | int | Number of samples to use per label. These samples are embedded, and a classifier is fit using the labels and samples. |
| evaluator_model | SklearnModelProtocol | Classifier to use for evaluation. Must implement the SklearnModelProtocol. |

Source code in mteb/abstasks/multilabel_classification.py


mteb.abstasks.clustering.AbsTaskClustering

Bases: AbsTask

Abstract class for clustering tasks.

This class embeds the corpus sentences, then samples N documents from the corpus and clusters them. Clustering quality is measured with the V-measure metric, which is invariant to permutations of the cluster labels. This procedure is repeated K times.

There are two ways to specify how a dataset is downsampled: max_document_to_embed and max_fraction_of_documents_to_embed. If both parameters are None, no downsampling is done in self._evaluate_subset(). At most one of the two can be set (i.e., not None) at a time.

If the clustering is hierarchical, i.e. each observation has an ordered list of labels (one per level), V-measures are calculated as outlined above for each level separately.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace Dataset containing the data for the clustering task. Must contain the following columns |
| max_fraction_of_documents_to_embed | float \| None | Fraction of documents to embed for clustering. |
| max_document_to_embed | int \| None | Maximum number of documents to embed for clustering. |
| max_documents_per_cluster | int | Number of documents to sample for each clustering experiment. |
| n_clusters | int | Number of clustering experiments to run. |
| k_mean_batch_size | int | Batch size to use for k-means clustering. |
| max_depth | | Maximum depth to evaluate clustering. If None, evaluates all levels. |
| input_column_name | str | Name of the column containing the input sentences or data points. |
| label_column_name | str | Name of the column containing the true cluster labels. |
| abstask_prompt | | Prompt to use for the task for instruction models if no prompt is provided in TaskMetadata.prompt. |

Source code in mteb/abstasks/clustering.py

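V-measure is the harmonic mean of homogeneity (each predicted cluster contains a single true class) and completeness (each true class lands in a single cluster). The from-scratch version below follows the standard entropy and mutual-information definition, with beta = 1 as in scikit-learn's v_measure_score. The helper names are hypothetical, and mteb may compute the metric through a library instead. Because the metric only depends on co-occurrence counts, relabeling the predicted clusters does not change the score.

```python
from __future__ import annotations

import math
from collections import Counter


def _entropy(labels: list) -> float:
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def _mutual_info(a: list, b: list) -> float:
    n = len(a)
    ca, cb, cab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((nab / n) * math.log(n * nab / (ca[x] * cb[y])) for (x, y), nab in cab.items())


def v_measure(labels_true: list, labels_pred: list) -> float:
    """Harmonic mean of homogeneity and completeness (hypothetical helper)."""
    h_c, h_k = _entropy(labels_true), _entropy(labels_pred)
    mi = _mutual_info(labels_true, labels_pred)
    homogeneity = mi / h_c if h_c else 1.0  # 1 - H(C|K) / H(C)
    completeness = mi / h_k if h_k else 1.0  # 1 - H(K|C) / H(K)
    if homogeneity + completeness == 0:
        return 0.0
    return 2 * homogeneity * completeness / (homogeneity + completeness)


print(v_measure([0, 0, 1, 1], [1, 1, 0, 0]))  # permuted labels, still a perfect score
print(v_measure([0, 0, 1, 1], [0, 0, 1, 2]))  # one class split in two clusters
```

For hierarchical labels, the same function would simply be applied once per level, pairing each level's true labels with that level's predicted clusters.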

mteb.abstasks.sts.AbsTaskSTS

Bases: AbsTask

Abstract class for STS experiments.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | Dataset or dict of Datasets for different subsets (e.g., languages). The dataset must contain the columns specified in column_names and a 'score' column. The columns in column_names should contain the text or image data to be compared. |
| column_names | tuple[str, str] | Tuple containing the names of the two columns to compare. |
| min_score | int | Minimum possible score in the dataset. |
| max_score | int | Maximum possible score in the dataset. |
| abstask_prompt | | Prompt to use for the task for instruction models if no prompt is provided in TaskMetadata.prompt. |
| input1_prompt_type | PromptType \| None | Type of prompt for the first input. Used for asymmetric tasks. |
| input2_prompt_type | PromptType \| None | Type of prompt for the second input. Used for asymmetric tasks. |

Source code in mteb/abstasks/sts.py

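In STS-style evaluation, the model's similarity for each pair is usually compared with the gold score through a rank correlation such as Spearman's rho. The sketch below assumes cosine similarity and Spearman correlation, and treats min_score and max_score as a linear rescaling of the gold scores to [0, 1]. A linear rescaling leaves a rank correlation unchanged. The embeddings are toy vectors, the helper names are hypothetical, and this is not mteb's evaluator.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var = sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var)


def spearman(x: list[float], y: list[float]) -> float:
    return pearson(average_ranks(x), average_ranks(y))


min_score, max_score = 0, 5  # assumed score range declared by the task
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [1.0, 1.0]), ([1.0, 0.0], [0.0, 1.0])]
gold = [5.0, 3.0, 0.5]
normalized_gold = [(g - min_score) / (max_score - min_score) for g in gold]
sims = [cosine(a, b) for a, b in pairs]
print(spearman(sims, normalized_gold))  # 1.0
```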

mteb.abstasks.zeroshot_classification.AbsTaskZeroShotClassification

Bases: AbsTask

Abstract class for zero-shot classification tasks for any modality.

The similarity is computed between an input (image or text) and candidate text prompts, such as "this is a dog" / "this is a cat".

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | Hugging Face dataset containing the data for the task. The dataset must contain the columns specified by self.input_column_name and self.label_column_name. |
| input_column_name | str | Name of the column containing the inputs (image or text). |
| label_column_name | str | Name of the column containing the labels (str). |

Source code in mteb/abstasks/zeroshot_classification.py

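The zero-shot setup described above amounts to embedding each candidate prompt once and then assigning every input to the prompt it is most similar to. The sketch below assumes cosine similarity and uses hand-written 2-D vectors in place of real image and text encoder outputs. The function zero_shot_predict is hypothetical.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_predict(
    input_embs: list[list[float]], label_texts: list[str], label_embs: list[list[float]]
) -> list[str]:
    """Hypothetical helper: pick the candidate prompt with the highest cosine similarity."""
    preds = []
    for x in input_embs:
        best = max(range(len(label_embs)), key=lambda j: cosine(x, label_embs[j]))
        preds.append(label_texts[best])
    return preds


label_texts = ["this is a dog", "this is a cat"]
label_embs = [[1.0, 0.0], [0.0, 1.0]]  # pretend text-encoder outputs
image_embs = [[0.9, 0.1], [0.2, 0.8]]  # pretend image-encoder outputs
print(zero_shot_predict(image_embs, label_texts, label_embs))  # ['this is a dog', 'this is a cat']
```

No training split is involved. The candidate texts act as the classifier, which is what distinguishes this from the few-shot classification tasks.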

get_candidate_labels()

Returns the text candidates for zero-shot classification.

Source code in mteb/abstasks/zeroshot_classification.py


mteb.abstasks.regression.AbsTaskRegression

Bases: AbsTaskClassification

Abstract class for regression tasks.

self.load_data() must generate a Hugging Face dataset with a split matching self.metadata.eval_splits and assign it to self.dataset. It must contain the following columns:

- text: str
- value: float

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace Dataset containing the data for the regression task. It must contain the columns named by input_column_name and label_column_name. Inputs can be text or images, and labels must be continuous values. |
| input_column_name | str | Name of the column containing the text inputs. |
| label_column_name | str | Name of the column containing the continuous values. |
| train_split | str | Name of the training split in the dataset. |
| n_experiments | int | Number of experiments to run with different random seeds. |
| n_samples | int | Number of samples to use for training the regression model. If the dataset has fewer samples than n_samples, all samples are used. |
| abstask_prompt | | Prompt to use for the task for instruction models if no prompt is provided in TaskMetadata.prompt. |
| evaluator_model | SklearnModelProtocol | The model to use for evaluation. Can be any sklearn-compatible model. Default is |

Source code in mteb/abstasks/regression.py


stratified_subsampling(dataset_dict, seed, splits=['test'], label='value', n_samples=2048, n_bins=10)

staticmethod

Subsamples the dataset with stratification by the supplied label, which is assumed to be a continuous value. The continuous values are bucketized into n_bins bins based on quantiles.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| dataset_dict | DatasetDict | The DatasetDict object. | required |
| seed | int | The random seed. | required |
| splits | list[str] | The splits of the dataset. | ['test'] |
| label | str | The label on which the stratified sampling is based. | 'value' |
| n_samples | int | Optional number of samples to subsample. | 2048 |
| n_bins | int | Optional number of bins used to bucketize the continuous label. | 10 |

Returns:

| Type | Description |
|---|---|
| DatasetDict | A subsampled DatasetDict object. |

Source code in mteb/abstasks/regression.py

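Quantile bucketizing turns a continuous target into n_bins roughly equal-sized groups, which can then be stratified like ordinary class labels. The sketch below works on plain dict rows rather than a DatasetDict, and both function names are hypothetical. It reads bin edges off the sorted values at the 1/n_bins, 2/n_bins, ... positions. Each bin then receives a share of n_samples proportional to its size, with quotas rounded by the largest-remainder method.

```python
from __future__ import annotations

import bisect
import random
from collections import defaultdict


def quantile_bins(values: list[float], n_bins: int = 10) -> list[int]:
    """Map each value to a bin index 0..n_bins-1 using empirical quantile edges."""
    ordered = sorted(values)
    edges = [ordered[len(ordered) * q // n_bins] for q in range(1, n_bins)]
    return [bisect.bisect_right(edges, v) for v in values]


def stratified_regression_subsample(
    rows: list[dict], label: str = "value", n_samples: int = 2048, n_bins: int = 10, seed: int = 42
) -> list[dict]:
    """Hypothetical helper: subsample rows while preserving each quantile bin's share."""
    if len(rows) <= n_samples:
        return list(rows)
    rng = random.Random(seed)
    groups: dict = defaultdict(list)
    for row, b in zip(rows, quantile_bins([r[label] for r in rows], n_bins)):
        groups[b].append(row)
    # Largest-remainder allocation keeps each bin's share and sums to n_samples.
    quotas, fractions = {}, []
    for b, group in groups.items():
        exact = n_samples * len(group) / len(rows)
        quotas[b] = int(exact)
        fractions.append((exact - int(exact), b))
    leftover = n_samples - sum(quotas.values())
    for _, b in sorted(fractions, reverse=True)[:leftover]:
        quotas[b] += 1
    sample = [row for b, group in groups.items() for row in rng.sample(group, quotas[b])]
    rng.shuffle(sample)
    return sample


rows = [{"text": f"t{i}", "value": i / 10} for i in range(1000)]
sub = stratified_regression_subsample(rows, n_samples=100)
print(len(sub))  # 100
```

Stratifying on bins rather than raw values keeps the subsample's target distribution close to the original, including the tails.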

mteb.abstasks.clustering_legacy.AbsTaskClusteringLegacy

Bases: AbsTask

Legacy abstract task for clustering. For new tasks, we recommend using AbsTaskClustering because it is faster, more sample-efficient, and produces more robust statistical estimates.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace Dataset containing the data for the clustering task. It must contain the following columns: sentences: List of inputs to be clustered. Can be text, images, etc. Name can be changed via |
| input_column_name | str | The name of the column in the dataset that contains the input sentences or data points. |
| label_column_name | str | The name of the column in the dataset that contains the true cluster labels. |
| abstask_prompt | | Prompt to use for the task for instruction model if not prompt is provided in TaskMetadata.prompt. |

Source code in mteb/abstasks/clustering_legacy.py


Text Tasks

mteb.abstasks.text.bitext_mining.AbsTaskBitextMining

¶

Bases: AbsTask

Abstract class for BitextMining tasks

The similarity is computed between pairs and the results are ranked.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace dataset containing the data for the task. It must contain the columns sentence1 and sentence2, holding the two texts to be compared. |
| parallel_subsets | | If True, all language pairs of the task are stored in a single split, with the languages as column names; otherwise, each language pair is a separate subset. |
| abstask_prompt | | Prompt to use for the task with instruction models if no prompt is provided in TaskMetadata.prompt. |
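
With parallel_subsets enabled, a single split stores one column per language. The hypothetical helper below, a sketch rather than the library's loader, shows how such rows could be regrouped into the sentence1/sentence2 layout for an explicit list of language pairs; the "src-tgt" key format is an assumption made for illustration.

```python
def pairs_from_parallel_split(rows, language_pairs):
    """Build sentence1/sentence2 columns for each (src, tgt) pair.

    rows: list of dicts mapping a language column name to its sentence;
          row i holds translations of the same sentence in every language.
    language_pairs: iterable of (src, tgt) column names to pair up.
    """
    subsets = {}
    for src, tgt in language_pairs:
        subsets[f"{src}-{tgt}"] = {  # hypothetical key format
            "sentence1": [row[src] for row in rows],
            "sentence2": [row[tgt] for row in rows],
        }
    return subsets


rows = [
    {"eng": "Good morning", "deu": "Guten Morgen", "fra": "Bonjour"},
    {"eng": "Thank you", "deu": "Danke", "fra": "Merci"},
]
subsets = pairs_from_parallel_split(rows, [("eng", "deu"), ("eng", "fra")])
print(subsets["eng-fra"]["sentence2"])  # ['Bonjour', 'Merci']
```

Storing all languages side by side avoids duplicating each source sentence once per pair; the per-pair view can be derived on demand as above.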

Source code in mteb/abstasks/text/bitext_mining.py


evaluate(model, split='test', subsets_to_run=None, *, encode_kwargs, prediction_folder=None, num_proc=1, **kwargs)


Adds loading support for "parallel" datasets (see parallel_subsets).

Source code in mteb/abstasks/text/bitext_mining.py


mteb.abstasks.pair_classification.AbsTaskPairClassification


Bases: AbsTask

Abstract class for pair classification tasks.

The similarity is computed between pairs and the results are ranked. Average precision is computed to measure how well the methods perform on pair classification.
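
The MTEB benchmark reports average precision based on cosine similarity as the main pair classification metric. The stdlib-only sketch below, with hypothetical function names, scores each pair by the cosine similarity of its two embeddings and computes average precision against the 0/1 labels; tie handling may differ from the library's implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def average_precision(scores, labels):
    """Mean of precision@k over the ranks k at which a positive (label 1) appears."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / hits if hits else 0.0


# Toy embeddings for the first and second input of three pairs.
emb1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
emb2 = [[0.9, 0.1], [1.0, 0.0], [1.0, 0.9]]
labels = [1, 0, 1]
scores = [cosine(a, b) for a, b in zip(emb1, emb2)]
print(average_precision(scores, labels))  # 1.0
```

Average precision needs no decision threshold: it only asks whether positive pairs are ranked above negative ones, which suits similarity scores whose scale differs between models.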

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace dataset containing the data for the task. It should contain the columns sentence1, sentence2, and labels. |
| input1_column_name | str | The name of the column containing the first sentence of each pair. |
| input2_column_name | str | The name of the column containing the second sentence of each pair. |
| label_column_name | str | The name of the column containing the pair labels, which should be 0 or 1. |
| abstask_prompt | | Prompt to use for the task with instruction models if no prompt is provided in TaskMetadata.prompt. |
| input1_prompt_type | PromptType \| None | Prompt type of the first input. Used for asymmetric tasks. |
| input2_prompt_type | PromptType \| None | Prompt type of the second input. Used for asymmetric tasks. |

Source code in mteb/abstasks/pair_classification.py


mteb.abstasks.text.summarization.AbsTaskSummarization


Bases: AbsTask

Abstract class for summarization experiments.

Attributes:

| Name | Type | Description |
|---|---|---|
| dataset | dict[HFSubset, DatasetDict] \| None | A HuggingFace dataset containing the data for the task. It should have the columns: text, the original text to be summarized; human_summaries, a list of human-written summaries of the text; machine_summaries, a list of machine-generated summaries of the text; and relevance, a list of integer relevance scores, one per machine summary, indicating how relevant each summary is to the original text. |
| min_score | int | Minimum possible relevance score (inclusive). |
| max_score | int | Maximum possible relevance score (inclusive). |
| human_summaries_column_name | str | Name of the column containing human summaries. |
| machine_summaries_column_name | str | Name of the column containing machine summaries. |
| text_column_name | str | Name of the column containing the original text. |
| relevancy_column_name | str | Name of the column containing relevance scores. |
| abstask_prompt | | Prompt to use for the task with instruction models if no prompt is provided in TaskMetadata.prompt. |
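
The MTEB benchmark scores each machine summary by its highest cosine similarity to any human summary of the same text, then reports the Spearman correlation between those scores and the human relevance ratings. The stdlib-only sketch below follows that recipe for a single text, using precomputed embeddings. It also rescales relevance to [0, 1] with min_score and max_score to show what the inclusive bounds mean, although Spearman correlation is unaffected by such a linear rescaling. Function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Pearson correlation of the ranks; undefined if either input is constant."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def score_text(human_embs, machine_embs, relevance, min_score, max_score):
    """Spearman correlation between predicted and human relevance for one text."""
    # Map inclusive [min_score, max_score] ratings onto [0, 1].
    gold = [(r - min_score) / (max_score - min_score) for r in relevance]
    # A machine summary is only as good as its closest human summary.
    predicted = [max(cosine(m, h) for h in human_embs) for m in machine_embs]
    return spearman(predicted, gold)


human = [[1.0, 0.0], [0.8, 0.6]]
machine = [[1.0, 0.1], [0.5, 0.5], [0.0, 1.0]]
print(score_text(human, machine, relevance=[5, 3, 1], min_score=1, max_score=5))
```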

Source code in mteb/abstasks/text/summarization.py


mteb.abstasks.text.reranking.AbsTaskReranking


Bases: AbsTaskRetrieval

Reranking task class.

Deprecated

This class is deprecated and will be removed in future versions. Please use the updated retrieval tasks instead.

You can add your task name to mteb.abstasks.text.reranking.OLD_FORMAT_RERANKING_TASKS to load it in the new format.

After loading the data, you can re-upload it using task.push_dataset_to_hub('your/repository').

For the data format and other details, see AbsTaskRetrieval.
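
To make the conversion concrete, the sketch below assumes an old-style reranking row with query, positive, and negative columns (an assumption; check the task's actual schema) and regroups it into corpus, queries, and relevance-judgment dictionaries in the style of BEIR retrieval data, plus a per-query list of candidate documents to rerank. It is a hypothetical helper, not the library's converter; AbsTaskRetrieval remains the authority on the exact format.

```python
def convert_reranking_rows(rows):
    """Regroup query/positive/negative rows into retrieval-style dictionaries."""
    corpus, queries, relevant_docs, candidates = {}, {}, {}, {}
    text_to_id = {}  # reuse one id for identical document texts

    def doc_id(text):
        if text not in text_to_id:
            text_to_id[text] = f"d{len(text_to_id)}"
            corpus[text_to_id[text]] = text
        return text_to_id[text]

    for i, row in enumerate(rows):
        qid = f"q{i}"
        queries[qid] = row["query"]
        # Positives are judged relevant with score 1; negatives get no judgment.
        relevant_docs[qid] = {doc_id(text): 1 for text in row["positive"]}
        # Every positive and negative document is a candidate for this query.
        candidates[qid] = [doc_id(text) for text in row["positive"] + row["negative"]]
    return corpus, queries, relevant_docs, candidates


rows = [{
    "query": "What is the capital of France?",
    "positive": ["Paris is the capital of France."],
    "negative": ["Berlin is the capital of Germany."],
}]
corpus, queries, relevant_docs, candidates = convert_reranking_rows(rows)
print(relevant_docs)  # {'q0': {'d0': 1}}
```

Deduplicating document texts into a shared corpus means a passage that appears as a candidate for several queries is stored, and embedded, only once.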

Source code in mteb/abstasks/text/reranking.py